These days, the biggest change to software development is the frequency of deployments. Product teams deploy releases to production earlier (and more often). Release cycles that last months or years are becoming rare, especially among those building pure software products.

Today, using a service-oriented architecture and microservices approach, developers can design a code base to be modular. This allows them to write and deploy changes to different parts of the code base simultaneously.

The business benefits of shorter deployment cycles are clear:

- Time-to-market is reduced

- Customers get product value in less time

- Customer feedback also flows back into the product team faster, which means the team can iterate on features and fix problems faster

- Overall developer morale goes up

However, this shift also creates new challenges for the operations or DevOps team. With more frequent deployments, it’s more likely that the deployed code could negatively affect site reliability or customer experience. That’s why it’s important to develop strategies for deploying code that minimize risk to the product and customers.

In this article, we’ll talk about a few different deployment strategies, best practices, and tools that will allow your team to work faster and more reliably.

Challenges of Modern Applications

Modern applications are often distributed and cloud-based. They can scale elastically to meet demand, and are more resilient to failure thanks to highly-available architectures. They may utilize fully managed services like AWS Lambda or Elastic Container Service (ECS) where the platform handles some of the operational responsibility.

These applications almost always have frequent deployments. For example, a mobile application or a consumer web application may undergo several changes within a month. Some are even deployed to production multiple times a day.

They often use microservice architectures in which several components work together to deliver full functionality. There can be different release cycles for different components, but they all have to work together seamlessly.

The increased number of moving parts means more chances for something to go wrong. With multiple development teams making changes throughout the codebase, it can be difficult to determine the root cause of a problem when one inevitably occurs.

Another challenge: the abstraction of the infrastructure layer, which is now considered code. Deployment of a new application may require the deployment of new infrastructure code as well.

Popular Deployment Strategies

To meet these challenges, application and infrastructure teams should devise and adopt a deployment strategy suitable for their use case.

We will review several deployment strategies and discuss their pros and cons so you can choose the one that suits your organization.

"Big Bang" Deployment

As the name suggests, "big bang" deployments update whole or large parts of an application in one fell swoop. This strategy goes back to the days when software was released on physical media and installed by the customer.

Big bang deployments required the business to conduct extensive development and testing before release, and they are often associated with the "waterfall model" of large sequential releases.

Modern applications have the advantage of updating regularly and automatically on either the client side or the server side. That makes the big bang approach slower and less agile for modern teams.

Characteristics of big bang deployment include:

- All major pieces are packaged in one deployment

- An existing software version is largely or completely replaced with a new one

- Development and testing cycles before each deployment are usually long

- A minimal chance of failure is assumed, as rollbacks may be impossible or impractical

- Completion times are usually long and can take multiple teams’ efforts

- Clients may need to take action to update the client-side installation

Big bang deployments aren’t suitable for modern applications because the risks are unacceptable for public-facing or business-critical applications where outages mean huge financial loss. Rollbacks are often costly, time-consuming, or even impossible.

The big bang approach can be suitable for non-production systems (e.g., re-creating a development environment) or vendor-packaged solutions like desktop applications.

Rolling Deployment

Rolling, phased, or step deployments are better than big bang deployments because they minimize many of the associated risks, including user-facing downtime without easy rollbacks.

In a rolling deployment, an application’s new version gradually replaces the old one. The actual deployment happens over a period of time. During that time, new and old versions will coexist without affecting functionality or user experience. This process makes it easier to roll back any new component incompatible with the old components.

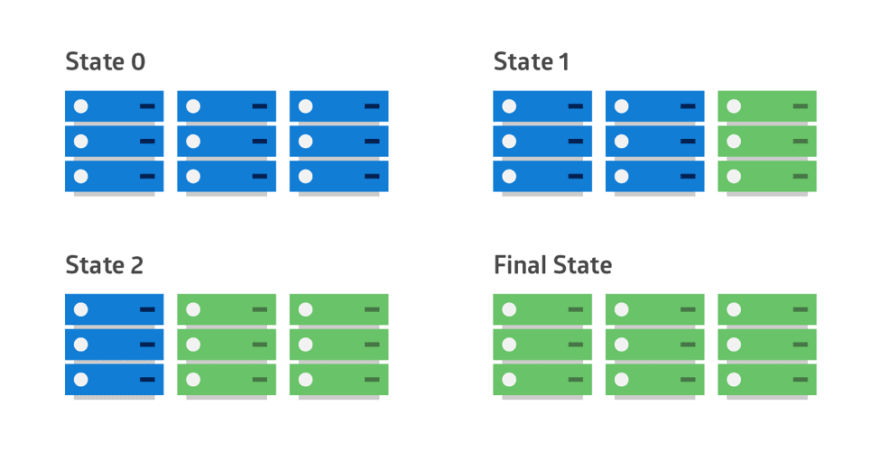

The diagram above shows the deployment pattern: the old version is shown in blue and the new version is shown in green across each server in the cluster.

An application suite upgrade is an example of a rolling deployment. If the original applications were deployed in containers, the upgrade can tackle one container at a time. Each container is modified to download the latest image from the app vendor’s site. If there is a compatibility issue for one of the apps, the older image can recreate the container. In this case, the new and old versions of the suite’s applications coexist until every app is upgraded.
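
The batch-by-batch replacement described above can be sketched in a few lines of Python. This is a minimal simulation, not a real orchestrator: the `Server` class, the `rolling_deploy` function, and the `is_healthy` callback are hypothetical names introduced for illustration, and "upgrading" a server here just means changing a version string.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Server:
    name: str
    version: str


def rolling_deploy(
    servers: List[Server],
    new_version: str,
    is_healthy: Callable[[Server], bool],
    batch_size: int = 1,
) -> bool:
    """Upgrade servers one batch at a time; restore touched servers on failure."""
    previous: Dict[str, str] = {}  # server name -> version before the upgrade
    for start in range(0, len(servers), batch_size):
        batch = servers[start:start + batch_size]
        for server in batch:
            previous[server.name] = server.version
            server.version = new_version
        if not all(is_healthy(server) for server in batch):
            # Roll back every server upgraded so far, including this batch.
            for server in servers:
                if server.name in previous:
                    server.version = previous[server.name]
            return False
    return True


cluster = [Server(f"web-{i}", "v1") for i in range(4)]
ok = rolling_deploy(cluster, "v2", is_healthy=lambda s: True, batch_size=2)
print(ok, [s.version for s in cluster])
```

Between batches, old and new versions run side by side, which is why the two versions need to stay compatible for the duration of the rollout.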

Blue-Green, Red-Black or A/B Deployment

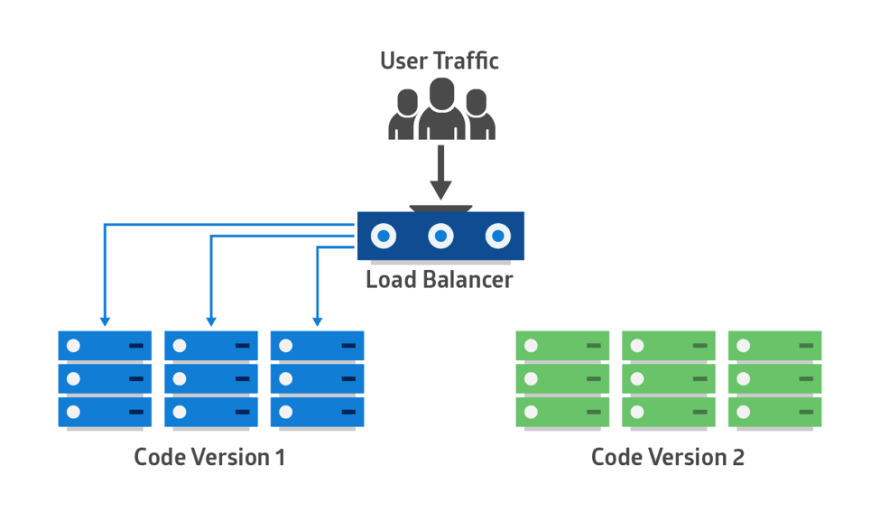

This is another fail-safe process. In this method, two identical production environments work in parallel.

One is the currently-running production environment receiving all user traffic (depicted as Blue). The other is a clone of it, but idle (Green). Both use the same database back-end and app configuration:

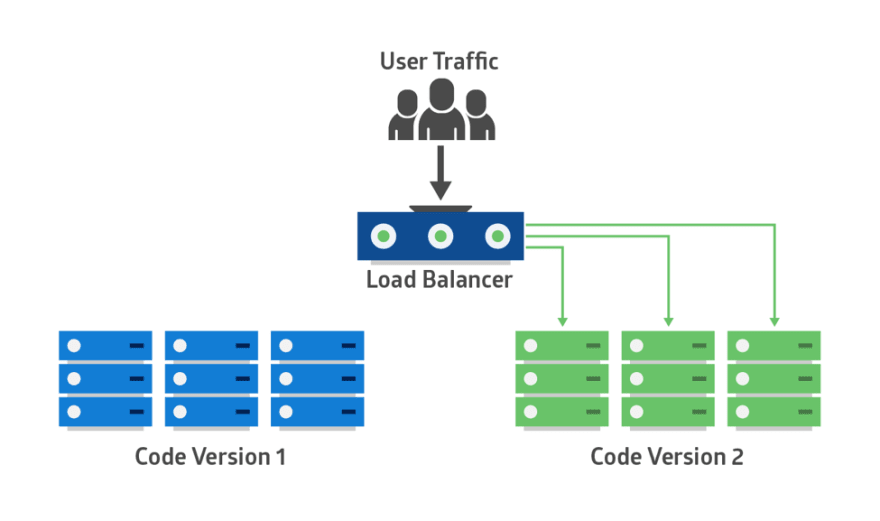

The new version of the application is deployed in the green environment and tested for functionality and performance. Once the testing results are successful, application traffic is routed from blue to green. Green then becomes the new production.

If there is an issue after green becomes live, traffic can be routed back to blue.
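
As a rough sketch of that switch and rollback, the snippet below models the router as a simple object that points at one of two environments. The `Environment`, `Router`, and `blue_green_release` names are assumptions made for this example; in practice the router would be a load balancer or DNS record, and `passes_tests` would be your functional and performance test suite.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Environment:
    name: str
    version: str


@dataclass
class Router:
    """Stand-in for a load balancer or DNS record that points at one environment."""
    environments: Dict[str, Environment]
    live: str = "blue"

    def idle(self) -> str:
        return "green" if self.live == "blue" else "blue"

    def switch(self) -> None:
        # Send all traffic to whichever environment is currently idle.
        self.live = self.idle()


def blue_green_release(
    router: Router,
    new_version: str,
    passes_tests: Callable[[Environment], bool],
) -> bool:
    target = router.environments[router.idle()]
    target.version = new_version  # deploy to the idle environment only
    if not passes_tests(target):
        return False  # live traffic was never touched
    router.switch()
    return True


router = Router({
    "blue": Environment("blue", "v1"),
    "green": Environment("green", "v1"),
})
released = blue_green_release(router, "v2", lambda env: env.version == "v2")
print(released, router.live)

# Rolling back is the same operation in reverse: point traffic at blue again.
router.switch()
print(router.live, router.environments[router.live].version)
```

Because the old environment is left untouched until the switch, rolling back does not require a redeploy.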

In a blue-green deployment, both systems use the same persistence layer or database back end. It’s essential to keep the application data in sync, and a mirrored database can help achieve that.

Blue can use the primary database for write operations while green uses the secondary for read operations. During switchover from blue to green, the database is failed over from primary to secondary. If green also needs to write data during testing, the databases can be in bidirectional replication.

Once green becomes live, you can shut down or recycle the old blue instances. You might deploy a newer version on those instances and make them the new green for the next release.

Blue-green deployments rely on traffic routing. This can be done by updating DNS CNAME records for hosts. However, long TTL values can delay these changes. Alternatively, you can change the load balancer settings so the changes take effect immediately. Features like connection draining in ELB let in-flight connections complete before the old instances are removed.
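
To make the idea of connection draining concrete, here is a small simulation. It is not how ELB implements the feature: the `Backend` class, the `drain` function, and the `tick` hook (used here only to simulate requests finishing) are all hypothetical. The sketch shows the general pattern: stop accepting new connections, then wait for in-flight ones to finish or for a timeout to expire.

```python
import time
from typing import Callable, Optional


class Backend:
    def __init__(self, name: str, in_flight: int = 0) -> None:
        self.name = name
        self.in_flight = in_flight  # requests currently being served
        self.accepting = True       # whether new connections are allowed

    def finish_one(self) -> None:
        if self.in_flight > 0:
            self.in_flight -= 1


def drain(
    backend: Backend,
    timeout_s: float,
    poll_s: float = 0.01,
    tick: Optional[Callable[[Backend], None]] = None,
) -> bool:
    """Stop new connections, then wait for in-flight ones to finish or time out."""
    backend.accepting = False
    deadline = time.monotonic() + timeout_s
    while backend.in_flight > 0 and time.monotonic() < deadline:
        if tick is not None:
            tick(backend)  # simulation hook: lets a request complete
        time.sleep(poll_s)
    return backend.in_flight == 0


blue = Backend("blue", in_flight=3)
drained = drain(blue, timeout_s=1.0, tick=Backend.finish_one)
print(drained, blue.accepting)
```

If `drain` returns `False`, the timeout expired with requests still open, and you have to decide whether to wait longer or cut those connections.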

Canary Deployment

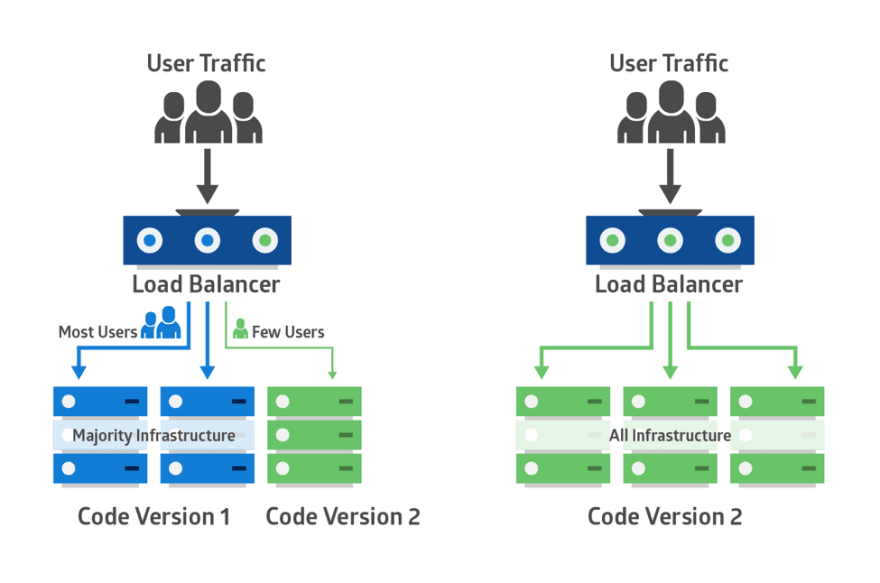

Canary deployment is like blue-green, except it’s more risk-averse. Instead of switching from blue to green in one step, you use a phased approach.

With canary deployment, you deploy new application code to a small part of the production infrastructure. Once the application is signed off for release, only a few users are routed to it. This minimizes any impact.

If no errors are reported, the new version can gradually roll out to the rest of the infrastructure. The image above demonstrates canary deployment.

The main challenge of canary deployment is to devise a way to route some users to the new application. Also, some applications may always need the same group of users for testing, while others may require a different group every time.

Consider several techniques for choosing which users to route to the new version:

- Exposing internal users to the canary deployment before allowing external user access

- Basing routing on the source IP range

- Releasing the application in specific geographic regions

- Using application logic to unlock new features for specific users and groups. This logic is removed when the application goes live for the rest of the users
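
The routing techniques above can be combined into a single decision function. The following is a minimal sketch under stated assumptions: `CanaryPolicy`, `bucket`, and `use_canary` are hypothetical names, and the percentage-based bucket is an addition not described in the list above. Hashing the user ID keeps each user in the same group across requests, which helps when an application always needs the same group of users for testing.

```python
import hashlib
import ipaddress
from dataclasses import dataclass
from typing import FrozenSet, Tuple


@dataclass(frozen=True)
class CanaryPolicy:
    internal_users: FrozenSet[str] = frozenset()
    ip_ranges: Tuple[str, ...] = ()        # CIDR blocks, e.g. "10.0.0.0/8"
    regions: FrozenSet[str] = frozenset()
    percent: int = 0                       # share of all other users (0-100)


def bucket(user_id: str) -> int:
    """Map a user ID to a stable bucket from 0 to 99."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def use_canary(policy: CanaryPolicy, user_id: str, ip: str, region: str) -> bool:
    if user_id in policy.internal_users:
        return True
    address = ipaddress.ip_address(ip)
    if any(address in ipaddress.ip_network(cidr) for cidr in policy.ip_ranges):
        return True
    if region in policy.regions:
        return True
    return bucket(user_id) < policy.percent


policy = CanaryPolicy(
    internal_users=frozenset({"alice"}),
    ip_ranges=("10.0.0.0/8",),
    regions=frozenset({"eu-west"}),
    percent=0,
)
print(use_canary(policy, "alice", "203.0.113.5", "us-east"))
print(use_canary(policy, "bob", "203.0.113.5", "us-east"))
```

Raising `percent` in steps is one way to roll the new version out gradually to the rest of the users.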

Deployment Best Practices

Modern application teams can follow a number of best practices to keep deployment risks to a minimum:

- Use a deployment checklist. For example, an item on the checklist may be to "back up all databases only after app services have been stopped" to prevent data corruption.

- Adopt Continuous Integration (CI). CI ensures code checked into the feature branch of a code repository merges with its main branch only after it has gone through a series of dependency checks, unit and integration tests, and a successful build. If there are errors along the path, the build fails and the app team is notified. Using CI therefore means every change to the application is tested before it can be deployed. Examples of CI tools include CircleCI and Jenkins.

- Adopt Continuous Delivery (CD). With CD, the CI-built code artifact is packaged and always ready to be deployed in one or more environments. Read more in our Low-Risk Continuous Delivery eBook.

- Use standard operating environments (SOEs) to ensure environment consistency. You can use tools like Vagrant and Packer for development workstations and servers.

- Use build automation tools to automate environment builds. With these tools, tearing down an entire infrastructure stack and rebuilding it from scratch is often as simple as clicking a button. One example of such a tool is CloudFormation.

- Use configuration management tools like Puppet, Chef, or Ansible on target servers to automatically apply OS settings, apply patches, or install software.

- Use communication channels like Slack for automated notifications of unsuccessful builds and application failures.

- Create a process for alerting the responsible team about deployments that fail. Ideally you’ll catch these in the CI environment, but if the changes are deployed you’ll need a way to notify the responsible team.

- Enable automated rollbacks for deployments that fail health checks, whether due to availability or error rate issues.
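
The last practice, automated rollbacks based on health checks, might look something like the sketch below. The `HealthSample` class, both function names, and the threshold values are assumptions chosen for illustration; real thresholds depend on your service's normal error rate and availability targets.

```python
from dataclasses import dataclass


@dataclass
class HealthSample:
    requests: int          # requests served since the deployment
    errors: int            # requests that ended in an error
    successful_pings: int  # health-check probes that succeeded
    total_pings: int       # health-check probes sent


def should_roll_back(
    sample: HealthSample,
    max_error_rate: float = 0.01,
    min_availability: float = 0.999,
) -> bool:
    """Return True when the error rate or availability breaches its threshold."""
    error_rate = sample.errors / sample.requests if sample.requests else 0.0
    availability = (
        sample.successful_pings / sample.total_pings if sample.total_pings else 0.0
    )
    return error_rate > max_error_rate or availability < min_availability


def version_to_serve(current: str, candidate: str, sample: HealthSample) -> str:
    """Keep the candidate only if its post-deployment health sample passes."""
    return current if should_roll_back(sample) else candidate


healthy = HealthSample(requests=10_000, errors=20, successful_pings=1000, total_pings=1000)
failing = HealthSample(requests=10_000, errors=500, successful_pings=1000, total_pings=1000)
print(version_to_serve("v1", "v2", healthy))
print(version_to_serve("v1", "v2", failing))
```

Treating a missing health sample (zero probes) as unavailable is a deliberately conservative choice in this sketch: with no evidence that the new version is healthy, it rolls back.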

Post-Deployment Monitoring

Even after you adopt all of those best practices, things may still fall through the cracks. Because of that, monitoring for issues occurring immediately after a deployment is as important as planning and executing a perfect deployment.

An application performance monitoring (APM) tool can help your team monitor critical performance metrics including server response times after deployments. Changes in application or system architecture can dramatically affect application performance.

An error-monitoring solution like Rollbar is equally essential. It will quickly notify your team of new or reactivated errors from a deployment that could uncover important bugs requiring immediate attention.
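
To illustrate what "new or reactivated errors" means, the sketch below compares the errors seen after a deployment against a record of previously known errors. This is not Rollbar's API or algorithm: the `classify_errors` function, the fingerprint strings, and the `"active"`/`"resolved"` statuses are hypothetical and only show the underlying idea.

```python
from typing import Dict, Iterable, Set


def classify_errors(
    seen_after_deploy: Iterable[str],
    known: Dict[str, str],
) -> Dict[str, Set[str]]:
    """Split post-deployment errors into new and reactivated ones.

    `known` maps an error fingerprint to "active" or "resolved".
    """
    new: Set[str] = set()
    reactivated: Set[str] = set()
    for fingerprint in seen_after_deploy:
        status = known.get(fingerprint)
        if status is None:
            new.add(fingerprint)          # never seen before this deployment
        elif status == "resolved":
            reactivated.add(fingerprint)  # previously fixed, now back
    return {"new": new, "reactivated": reactivated}


known_errors = {
    "TypeError:checkout.total": "resolved",
    "TimeoutError:search.query": "active",
}
after_deploy = [
    "TypeError:checkout.total",
    "TimeoutError:search.query",
    "KeyError:profile.avatar",
]
report = classify_errors(after_deploy, known_errors)
print(sorted(report["new"]), sorted(report["reactivated"]))
```

Errors that were already active before the deployment are left out of the report, so the team's attention goes to what the release changed.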

Without an error monitoring tool, the bugs might never be discovered. While a few users who encounter the bugs will take the time to report them, most won’t. The negative customer experience can lead to satisfaction issues over time, or worse, prevent business transactions from taking place now.

An error monitoring tool also creates a shared visibility of all the post-deployment issues among Operations / DevOps teams and developers. This shared understanding allows the teams to be more collaborative and responsive.

Originally published on rollbar.com

Top comments (14)

Hi,

I am the editor of InfoQ China, which focuses on software development. We like your article and plan to translate it.

Before we translate it into Chinese and publish it on our website, I want to ask for your permission first! The translated version is provided for informational purposes only, and will not be used for any commercial purpose.

In exchange, we will put the English title and link at the beginning of the Chinese article. If our readers want to read more about this, they can click back to your website.

Thanks a lot, hope to get your help. If you have any more questions, please let me know.

Yes if you add a canonical link to rollbar.com/blog/deployment-strate...

ok,thanks

Hi Jason,

Thanks for the article. I have an issue with Blue-Green deployment which I'm trying to implement. The application I'm working on has several components such as an Angular SPA front-end, public-facing APIs and database.

I am fine with pointing both blue and green to the same production database. My problem is with the APIs. When I deploy to staging.mydomain.com my staging frontend is also pointing to production instead of staging APIs. If I deploy with staging configuration values, I don't currently have a way of changing those values without re-deploying.

So basically I can manage switching 1 ELB but I'm struggling when different components behind different ELBs need to speak with each other.

What would you recommend to address such a scenario?

Thanks again for the insightful article.

Kind regards,

Volkan

Hey Volkan, first of all staging environments are a bit of a different concept since blue-green deployments are typically to production environments. Typically staging environments are considered temporary and you have a separate deployment to production.

To address your question though, I'll have to guess what you mean by "staging configuration values". One simple solution is to add some environment variables (or other configuration) for staging environments that tell the app where to find the appropriate services. For example, you might include the host name for your API as an environment variable, then you'd have one value for staging and a different one for production. Alternatively, if your staging app is in its own VPC or internal network, you can use the same host name to connect to your API in both environments, but just have separate instances for each environment. More sophisticated environments might use service discovery or a service mesh to dynamically route to the right API.

Hope that helps!

Hi Jason,

Thanks for your response.

I guess I used the terminology incorrectly. By staging I essentially mean the green production environment.

That's indeed helpful.

Kind regards,

Volkan

What about db schema migration? How do you handle that?

Hi Erik,

DB schema migration can be part of any of these approaches too.

With Big Bang, the DB schema is changed at the same time the newer version of the application is deployed.

With Rolling, Blue-Green or Canary, the new app's logic uses conditional branching to access a new DB schema which exists side-by-side with the old schema. Once the app is fully released, another small change removes that conditional access to the DB, so the new DB schema is always accessed.

In terms of how the DB schema is rolled out during deployment, this is done the same way app code is rolled out - via a Continuous Integration path where a package containing both app and database code is deployed.

Hope this answers your question.


@Sadequl Hussain : but what kind of replication do you use for the two database schemas that both receive inserts and updates from the users? If you have some kind of bidirectional replication how do you prevent the DDL part from the new schema "leaking" too early to the old schema?

@mostlyjason Nice article. One thing though - on Azure blog (azure.microsoft.com/en-us/blog/blu...) they describe Blue-Green deployment completely differently. They state:

What they described looks a lot like your "Canary deployment" definition. Your definition of Blue-Green is about switching ALL traffic to the new servers.

What's the truth? And what is actually the source of truth for these concepts?

Hi,

Thanks for the clear and informative article.

Our product is composed of several microservices. Some of them are 'worker' services, each of which is actually a scheduled task (Lambda) that runs every minute, gets a request from the DB, executes it, and updates the status.

We want to adopt blue/green deployment and wonder how to apply it to those workers.

The only option we can think of is adding a version column to the request so that each deployment version processes its own requests.

But with that approach some requests may never be completed: if the old version is deleted while some requests are not finished yet, they will not be picked up by the new 'worker', since the new worker looks only at requests with the new version.

Can you suggest a solution for that, or a different approach?

That's a brilliant post. Thanks for the precise insights on the major deployment strategies being followed in the world of CI/CD these days.

Various aspects of canary deployment with queue workers are covered here: varlog.co.in/blog/canary-deploymen...