Kubernetes is an open-source system for automating deployment, scaling and management of containerized applications.

In this post, you will get an overview of the K8s architecture. If you are coming from software engineering and want to get a first understanding of how K8s works: this post is for you.

Terminology.

Namespace.

a group of resources. Resource quotas can be set per namespace with a ResourceQuota object, and per-container defaults and bounds with a LimitRange. Also, user permissions can be applied.

K8s resources are either namespaced or cluster-scoped.

Two objects of the same kind can not have the same Name value in a namespace.
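To make the namespace and quota idea concrete, here is a minimal sketch that builds a Namespace and a ResourceQuota manifest as Python dictionaries and prints them as JSON, a format `kubectl apply -f` accepts alongside YAML. The names `team-a` and `team-a-quota` and all quota values are made up for illustration.

```python
import json

# Hypothetical namespace name, chosen only for this example.
NAMESPACE = "team-a"

namespace = {
    "apiVersion": "v1",
    "kind": "Namespace",
    "metadata": {"name": NAMESPACE},
}

# A ResourceQuota caps what all Pods in the namespace may claim in total.
quota = {
    "apiVersion": "v1",
    "kind": "ResourceQuota",
    "metadata": {"name": "team-a-quota", "namespace": NAMESPACE},
    "spec": {
        "hard": {
            "pods": "10",
            "requests.cpu": "4",
            "requests.memory": "8Gi",
            "limits.cpu": "8",
            "limits.memory": "16Gi",
        }
    },
}


def to_manifest(*objects):
    """Wrap several objects in a v1 List so they fit into one JSON file."""
    return json.dumps(
        {"apiVersion": "v1", "kind": "List", "items": list(objects)}, indent=2
    )


print(to_manifest(namespace, quota))
```

Saved to a file, the output could be applied with `kubectl apply -f <file>.json`.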

Context.

This consists of the user, the cluster name (e.g. dev and prod) and the namespace. It is used to switch between permissions and restrictions.

The context information is stored in ~/.kube/config.
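The following sketch shows how a context ties user, cluster and namespace together. It parses a trimmed-down kubeconfig written as JSON (which is also valid YAML) and resolves a context by name. All names in it (`dev-admin`, `alice`, and so on) are hypothetical, and a real kubeconfig contains more sections, such as `clusters` and `users`.

```python
import json

# A trimmed-down kubeconfig. All names are hypothetical.
KUBECONFIG = json.loads("""
{
  "current-context": "dev-admin",
  "contexts": [
    {"name": "dev-admin",
     "context": {"cluster": "dev", "user": "alice", "namespace": "team-a"}},
    {"name": "prod-readonly",
     "context": {"cluster": "prod", "user": "alice-ro"}}
  ]
}
""")


def resolve_context(config, name=None):
    """Return (cluster, user, namespace) for the named or the current context."""
    name = name or config["current-context"]
    for entry in config["contexts"]:
        if entry["name"] == name:
            ctx = entry["context"]
            # Without an explicit namespace, "default" is used.
            return ctx["cluster"], ctx["user"], ctx.get("namespace", "default")
    raise KeyError(f"no context named {name!r}")


print(resolve_context(KUBECONFIG))
print(resolve_context(KUBECONFIG, "prod-readonly"))
```

On a real cluster, `kubectl config get-contexts` lists the contexts and `kubectl config use-context <name>` switches between them.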

Resource limits.

Limits can be set per namespace and per Pod. The namespace limits have priority over the Pod spec: a Pod that violates them is rejected at admission.
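As a rough illustration of how a namespace-level ceiling overrides what a Pod asks for, the sketch below compares the CPU limits in a Pod spec against a per-container maximum. The ceiling value, the function names and the Pod spec are assumptions made for this example; the real check happens inside the API server's admission step.

```python
def parse_cpu(quantity):
    """Convert a CPU quantity such as "500m" or "2" to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return int(float(quantity) * 1000)


# Hypothetical per-container ceiling, as the "max" of a LimitRange would define it.
NAMESPACE_MAX_CPU = "1"


def find_violations(pod_spec, max_cpu=NAMESPACE_MAX_CPU):
    """Return the names of containers whose CPU limit exceeds the namespace max."""
    ceiling = parse_cpu(max_cpu)
    return [
        container["name"]
        for container in pod_spec["containers"]
        if parse_cpu(container["resources"]["limits"]["cpu"]) > ceiling
    ]


pod_spec = {
    "containers": [
        {"name": "web", "resources": {"limits": {"cpu": "500m"}}},
        {"name": "batch", "resources": {"limits": {"cpu": "2"}}},
    ]
}

violations = find_violations(pod_spec)
print("rejected, offending containers:" if violations else "admitted", violations)
```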

Pod security admission.

There are three profiles: privileged, baseline and restricted.
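A profile is selected per namespace through labels. The sketch below builds such a Namespace manifest as a Python dictionary and prints it as JSON. The helper name `namespace_with_profile` and the namespace `team-a` are invented for this example; the label keys are the ones Pod Security Admission reads.

```python
import json

PROFILES = ("privileged", "baseline", "restricted")


def namespace_with_profile(name, enforce, warn=None):
    """Build a Namespace manifest labeled for Pod Security Admission."""
    if enforce not in PROFILES or (warn is not None and warn not in PROFILES):
        raise ValueError("profile must be one of " + ", ".join(PROFILES))
    # "enforce" rejects Pods that violate the profile.
    labels = {"pod-security.kubernetes.io/enforce": enforce}
    if warn is not None:
        # "warn" only shows a warning to the user, it does not block the Pod.
        labels["pod-security.kubernetes.io/warn"] = warn
    return {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name, "labels": labels},
    }


# "team-a" is a hypothetical namespace name.
manifest = namespace_with_profile("team-a", "baseline", warn="restricted")
print(json.dumps(manifest, indent=2))
```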

Network policies.

Ingress and egress traffic can be limited by namespace, by Pod labels or by IP address ranges.
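Here is a sketch of a NetworkPolicy that uses all three kinds of sources mentioned above. It is built as a Python dictionary and printed as JSON. Every name, label, port and CIDR range is an assumption for illustration, and the policy only has an effect if the cluster's network plugin supports network policies.

```python
import json

policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "db-ingress", "namespace": "team-a"},
    "spec": {
        # The Pods this policy protects.
        "podSelector": {"matchLabels": {"app": "db"}},
        "policyTypes": ["Ingress", "Egress"],
        "ingress": [
            {
                # Traffic is allowed if it matches any one of these sources.
                "from": [
                    {"namespaceSelector": {"matchLabels": {"team": "frontend"}}},
                    {"podSelector": {"matchLabels": {"app": "api"}}},
                    {"ipBlock": {"cidr": "10.0.0.0/24"}},
                ],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
        # Egress is listed in policyTypes but has no rules:
        # outbound traffic from the selected Pods is denied.
        "egress": [],
    },
}

print(json.dumps(policy, indent=2))
```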

K8s API Flow.

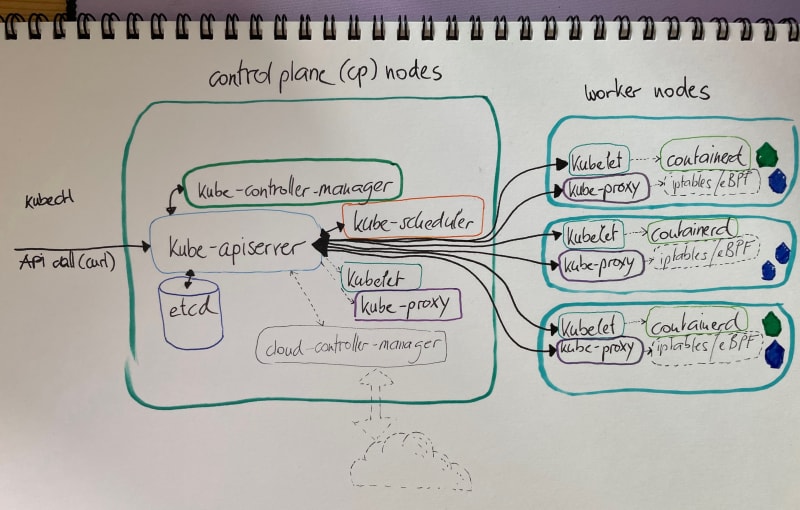

In the following sections, the parts of the control plane and the worker nodes will be explained.

Control plane node components

kube-apiserver

is the central part of a K8s cluster. All calls are handled on this server. Every API call passes three steps: authentication, authorization, and admission control.

Only the kube-apiserver connects to the etcd database.
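The three steps can be pictured as a small pipeline. The following is a toy model, not how the kube-apiserver is implemented: the token table, the permission table and the admission rule are all invented, and a plain dictionary stands in for etcd.

```python
# Hypothetical token and permission tables. A real cluster uses
# certificates or tokens, RBAC objects and admission plugins instead.
TOKENS = {"s3cr3t": "alice"}
PERMISSIONS = {("alice", "create", "pods")}


class Rejected(Exception):
    """Raised by whichever stage refuses the request."""


def authenticate(request):
    user = TOKENS.get(request.get("token"))
    if user is None:
        raise Rejected("401 Unauthorized")
    return user


def authorize(user, request):
    if (user, request["verb"], request["resource"]) not in PERMISSIONS:
        raise Rejected("403 Forbidden")


def admit(request):
    obj = request["object"]
    # Mutating step: fill in a default, here the namespace.
    obj.setdefault("namespace", "default")
    # Validating step: refuse objects that break a (made-up) policy.
    if obj.get("privileged"):
        raise Rejected("admission denied: privileged pods are not allowed")
    return obj


def handle(request, store):
    user = authenticate(request)
    authorize(user, request)
    obj = admit(request)
    # Only after all three steps is the object written (stand-in for etcd).
    store[(obj["namespace"], obj["name"])] = obj
    return "201 Created"


store = {}
request = {"token": "s3cr3t", "verb": "create", "resource": "pods",
           "object": {"name": "web"}}
print(handle(request, store))
```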

kube-scheduler

scans available resources (like CPU, memory utilization, node health and workload distribution) and decides which node will host a Pod of containers.

It monitors the cluster and makes its decisions based on the current state.
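A heavily simplified way to think about this decision is "filter, then score". The sketch below filters out nodes that are unhealthy or too small and then picks the node with the most CPU left over. The node data and the scoring rule are assumptions for illustration; the real scheduler uses many more criteria.

```python
# Hypothetical cluster state: free CPU in millicores, free memory in MiB.
NODES = [
    {"name": "node-a", "ready": True, "free_cpu": 500, "free_mem": 1024},
    {"name": "node-b", "ready": True, "free_cpu": 2000, "free_mem": 4096},
    {"name": "node-c", "ready": False, "free_cpu": 4000, "free_mem": 8192},
]


def schedule(pod, nodes=NODES):
    """Return the name of the chosen node, or None if no node fits."""
    # Filter: keep only healthy nodes with enough free resources.
    feasible = [
        node for node in nodes
        if node["ready"]
        and node["free_cpu"] >= pod["cpu"]
        and node["free_mem"] >= pod["mem"]
    ]
    if not feasible:
        return None  # the Pod would stay in Pending
    # Score: prefer the node with the most CPU left after placing the Pod.
    return max(feasible, key=lambda node: node["free_cpu"] - pod["cpu"])["name"]


print(schedule({"cpu": 1000, "mem": 2048}))
print(schedule({"cpu": 9000, "mem": 2048}))
```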

etcd database

is a key-value store that holds the state of the cluster, the networking configuration and other persistent information.

kube-controller-manager

is a core control loop daemon that interacts with the kube-apiserver to determine the state of the cluster. If the current state does not match the desired state, the manager contacts the necessary controllers to reconcile the two. There are several controllers in use, like endpoints, namespace and replication.
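The idea of matching the current state to the desired state can be sketched as a single reconcile pass of a replication-style controller. This is a toy model with invented Pod names; real controllers talk to the kube-apiserver instead of mutating a local list.

```python
import itertools

_ids = itertools.count(1)


def reconcile(desired, running):
    """Bring the list of running Pods to the desired count and report the actions."""
    actions = []
    while len(running) < desired:
        name = f"web-{next(_ids)}"  # hypothetical Pod naming scheme
        running.append(name)
        actions.append(("create", name))
    while len(running) > desired:
        actions.append(("delete", running.pop()))
    return actions


running = []
print(reconcile(3, running))  # starts from nothing, so Pods are created
running.pop(1)                # simulate a Pod that crashed
print(reconcile(3, running))  # the missing Pod is replaced
print(reconcile(1, running))  # scaling down deletes the surplus
```

Calling `reconcile` again without any change returns an empty list, which is the steady state a control loop aims for.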

cloud-controller-manager

interacts with agents outside of the cluster, typically the API of the cloud provider. It allows faster changes without altering the core K8s control process (see kube-apiserver).

Each kubelet must be started with the --cloud-provider=external setting passed to the binary.

Worker node components

kubelet

interacts with the underlying container runtime (installed on all nodes) through the Container Runtime Interface (CRI) and ensures that all containers are running as desired.

It accepts the API calls for Pod specifications and configures the local node until the specification has been met.

For example, if a Pod needs access to storage, Secrets or ConfigMaps, the kubelet will make this happen.

It sends the status back to the kube-apiserver to be persisted in etcd.

kube-proxy

manages the network connectivity to the containers via iptables rules (IPv4 and IPv6). It watches Services and Endpoints and updates the rules accordingly. The older 'userspace mode' has been removed in recent K8s releases.

logging

Currently, there is no cluster-wide logging. Fluentd can be used to have a unified logging layer for the cluster.

metrics

run kubectl top nodes or kubectl top pods to get the CPU and memory usage of nodes and Pods. This requires the metrics-server add-on to be installed.

If needed, Prometheus can be deployed to gather metrics from nodes and applications.

container engine

A container engine for the management of containerized applications, like containerd or cri-o.

Pods

are the smallest units we can work with on K8s. The design of a pod follows a one-process-per-container architecture. A pod represents a group of co-located containers with some associated data volumes.

Containers in a pod start in parallel by default.

special containers:

- initContainers: run to completion, one after another, before the regular containers start. Use them if a container has to wait for something else to be ready first.

- sidecar: used to perform helper tasks, like logging.

single IP per Pod

All containers in a pod share the same network namespace and therefore the same IP address. K8s does not address the containers individually, only the pod.

The containers of a pod communicate with each other over the loopback interface, by writing to files on a common filesystem, or via inter-process communication (IPC).
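To tie the Pod concepts together, here is a sketch of a Pod manifest with an init container, a main container and a logging sidecar that share a volume. It is built as a Python dictionary and printed as JSON. The images, commands, the `db` host name and all other names are illustrative assumptions, not a tested production setup.

```python
import json

# The shared volume is how the main container and the sidecar exchange files.
shared_logs = {"name": "logs", "emptyDir": {}}

pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "web", "labels": {"app": "web"}},
    "spec": {
        "volumes": [shared_logs],
        # Runs to completion before the containers below are started.
        "initContainers": [
            {
                "name": "wait-for-db",
                "image": "busybox:1.36",
                # "db" is a hypothetical Service name.
                "command": ["sh", "-c", "until nslookup db; do sleep 2; done"],
            }
        ],
        "containers": [
            {
                "name": "app",
                "image": "nginx:1.27",
                "volumeMounts": [{"name": "logs", "mountPath": "/var/log/nginx"}],
            },
            {
                # Sidecar: a helper that follows the log file of the main container.
                "name": "log-shipper",
                "image": "busybox:1.36",
                "command": ["sh", "-c", "tail -F /logs/access.log"],
                "volumeMounts": [{"name": "logs", "mountPath": "/logs"}],
            },
        ],
    },
}

print(json.dumps(pod, indent=2))
```

Both regular containers also share the Pod's single IP, so the sidecar could reach the web server on `localhost` as well.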

Services

are flexible and scalable abstractions that connect resources. Each Service handles a particular bit of traffic, for example a NodePort or a LoadBalancer that distributes inbound requests to a set of Pods. They are also used for resource control and security.

They use label selectors to know which objects to connect. Label selectors in K8s come in two forms (a Service's own selector supports only the equality-based one):

- equality-based: =, ==, !=

- set-based: in, notin, exists
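The two selector styles can be sketched as small matching functions over a label dictionary. The function names and the sample labels are made up for this example. Note that `!=` and `notin` also match objects that do not carry the key at all.

```python
def matches_equality(labels, key, op, value):
    """Evaluate an equality-based requirement such as env = prod."""
    if op in ("=", "=="):
        return labels.get(key) == value
    if op == "!=":
        # Also true when the key is missing.
        return labels.get(key) != value
    raise ValueError(f"unknown operator: {op}")


def matches_set(labels, key, op, values=()):
    """Evaluate a set-based requirement such as env in (prod, staging)."""
    if op == "in":
        return key in labels and labels[key] in values
    if op == "notin":
        # Also true when the key is missing.
        return labels.get(key) not in values
    if op == "exists":
        return key in labels
    raise ValueError(f"unknown operator: {op}")


labels = {"app": "web", "env": "prod"}  # hypothetical Pod labels

print(matches_equality(labels, "env", "=", "prod"))
print(matches_equality(labels, "tier", "!=", "cache"))
print(matches_set(labels, "env", "in", ("prod", "staging")))
print(matches_set(labels, "tier", "exists"))
```

On the command line the same expressions can be passed to kubectl, for example `kubectl get pods -l 'env in (prod,staging)'`.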

Operators

aka watch-loops aka controllers query the current state against the given spec and execute code to meet the spec.

A DeltaFIFO queue is used. The loop process only ends if the delta is the type Deleted.
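The watch-loop idea can be sketched with a plain queue of deltas. This is a strongly simplified model and not the actual DeltaFIFO implementation: the tuple layout and the log messages are assumptions, and only three delta types are handled.

```python
from collections import deque


def run_loop(deltas):
    """Drain a queue of (type, name, spec) deltas and keep a local cache in sync."""
    cache = {}
    log = []
    queue = deque(deltas)
    while queue:
        kind, name, spec = queue.popleft()
        if kind == "Deleted":
            # The object is gone, so the loop stops working on it.
            cache.pop(name, None)
            log.append(f"stop watching {name}")
            continue
        # Added or Updated: remember the spec and act on it.
        cache[name] = spec
        log.append(f"reconcile {name} -> {spec}")
    return cache, log


cache, log = run_loop([
    ("Added", "web", {"replicas": 1}),
    ("Updated", "web", {"replicas": 3}),
    ("Deleted", "web", None),
    ("Added", "db", {"replicas": 1}),
])
print(cache)
for line in log:
    print(line)
```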

Networking Setup

ClusterIP

is used for the traffic within the cluster.

NodePort

first creates a ClusterIP and then associates a port of the node with that new ClusterIP.

LoadBalancer

If a LoadBalancer Service is used, it will first create a ClusterIP and then a NodePort. Then it will make an async request for an external load balancer. If no external load balancer is configured to respond, the external IP of the Service will stay in pending state.
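The three Service types differ only in a few fields of the manifest. The sketch below is a small, hypothetical helper that builds a Service manifest as a Python dictionary and checks that an explicit node port lies in the default range of 30000 to 32767. All names, labels and ports are made up.

```python
import json

SERVICE_TYPES = ("ClusterIP", "NodePort", "LoadBalancer")
NODE_PORT_RANGE = range(30000, 32768)  # default node port range


def service(name, selector, port, target_port,
            service_type="ClusterIP", node_port=None):
    """Build a Service manifest for one port."""
    if service_type not in SERVICE_TYPES:
        raise ValueError(f"unknown Service type: {service_type}")
    port_spec = {"port": port, "targetPort": target_port}
    if node_port is not None:
        if service_type == "ClusterIP":
            raise ValueError("a ClusterIP Service has no node port")
        if node_port not in NODE_PORT_RANGE:
            raise ValueError("node port outside the default 30000-32767 range")
        port_spec["nodePort"] = node_port
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {"type": service_type, "selector": selector, "ports": [port_spec]},
    }


# Reachable only inside the cluster.
internal = service("web", {"app": "web"}, 80, 8080)
# Additionally reachable on port 30080 of every node.
external = service("web-public", {"app": "web"}, 80, 8080,
                   service_type="NodePort", node_port=30080)

print(json.dumps([internal, external], indent=2))
```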

Ingress Controller

acts as a reverse proxy to route external traffic to the assigned services based on the configuration. So its key responsibilities are:

- Routing and Load Balancing

- TLS Termination

- Path-Based Routing

- Virtual Hosts

- Authentication and Authorization
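Path-based routing and virtual hosts boil down to a lookup by host name and path prefix. The following toy router illustrates the idea. The rule table and the service names are invented, and real Ingress controllers support more matching options than this longest-prefix rule.

```python
# Hypothetical routing rules: (host, path prefix, backend service).
RULES = [
    ("shop.example.com", "/", "storefront"),
    ("shop.example.com", "/api", "api"),
    ("blog.example.com", "/", "blog"),
]


def route(host, path, rules=RULES):
    """Return the backend for a request, or None if no rule matches."""
    candidates = [
        (prefix, backend)
        for rule_host, prefix, backend in rules
        if rule_host == host
        # Match whole path segments only: /api matches /api/users, not /apiary.
        and (path == prefix or path.startswith(prefix.rstrip("/") + "/"))
    ]
    if not candidates:
        return None
    # The longest matching prefix wins.
    return max(candidates, key=lambda candidate: len(candidate[0]))[1]


print(route("shop.example.com", "/api/users"))
print(route("shop.example.com", "/apiary"))
print(route("blog.example.com", "/posts/1"))
print(route("unknown.example.com", "/"))
```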

Video about the K8s API: https://www.youtube.com/watch?v=YsmgB2QDaUg

Container Network Interface (CNI) Configuration File

It is the default networking interface mechanism used by kubeadm, which is the K8s cluster bootstrapping tool.

It is a specification to configure container networking communications, provide a single IP per pod and remove resources when a container is deleted.

The CNI is language-agnostic and there are many different plugins available.
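CNI configuration files are written in JSON. As a sketch of what such a file can look like, the following builds a configuration for the `bridge` reference plugin with `host-local` address management and prints it. The network name, the bridge name and the subnet are assumptions for illustration. The plugin you actually install (Calico, Cilium, Flannel and so on) brings its own configuration.

```python
import json

cni_config = {
    "cniVersion": "1.0.0",
    "name": "example-net",      # hypothetical network name
    "type": "bridge",           # the plugin binary to call
    "bridge": "cni0",           # name of the Linux bridge to create or use
    "isGateway": True,
    "ipMasq": True,
    # IP address management: hands out one IP per pod from the subnet
    # and releases it again when the pod is removed.
    "ipam": {
        "type": "host-local",
        "ranges": [[{"subnet": "10.22.0.0/16"}]],
        "routes": [{"dst": "0.0.0.0/0"}],
    },
}

print(json.dumps(cni_config, indent=2))
```

By convention such files are placed in `/etc/cni/net.d/` on each node.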

Now you have a first overview of the architecture of K8s.

You learned about the difference between the control plane and the worker nodes and their components. With the knowledge of this terminology in place, you can start to get into the details and run a cluster yourself.

If you are already working with the K8s API, remember that for now kubectl --help is your best friend. kubectl offers more than 40 commands, and you can explore all of them with the --help flag, for example kubectl taint --help. You will get your information faster there because ChatGPT and Bard tend to talk a lot and say so little.

In the next post of this series, I will write about how to build a K8s cluster. See you there.

Dig deeper:

official documentation

K8s API Flow explained in a beautiful video

concepts of cluster networking
